ChainSyncer cookbook

A collection of recipes for using the ChainSyncer module

As promised in the last article, I will write about some best practices for using the ChainSyncer module.

Maximum Consistency

Due to the asynchronous nature of the module and the overhead of most RPC servers, some tricks are required to keep your backend data consistent with the blockchain.

Here is an example: We have an abstract staking contract.

// SPDX-License-Identifier: MIT
pragma solidity 0.8.17;

/**
 * A deliberately abstract staking contract, for illustration only
 */
contract Staking {
    struct Pool {
        uint256 total_staked;
    }

    mapping(uint256 => Pool) pools;

    event Staked(
        uint256 indexed pool_id,
        address staked_by,
        uint256 current_total_staked
    );

    function stake(uint256 pool_id_, uint256 amount_) external {
        pools[pool_id_].total_staked += amount_;
        emit Staked(pool_id_, msg.sender, pools[pool_id_].total_staked);
    }
}

The task is to track the number of tokens in the pool under conditions of high contract load (let’s say it is very popular).

We will do this with the help of the Staked event.

// Example using Mongoose
// This is just an abstract example, don't try to copy-paste it
const Mongoose = require('mongoose');
const { ChainSyncer } = require('chain-syncer');
const { MongoDBAdapter } = require('@chainsyncer/mongodb-adapter');

// where the pulled events will be stored
await Mongoose.connect(process.env['MONGO_SRV']);
const adapter = new MongoDBAdapter(Mongoose.connection.db);

const syncer = new ChainSyncer(adapter, { /* ... some options ... */ });

// StakingPool is assumed to be a Mongoose model defined elsewhere
syncer.on('Staking.Staked', async (
  { global_index }, // needed in the next example
  pool_id,
  staked_by, // not needed here
  current_total_staked,
) => {
  await StakingPool.updateOne({
    _id: pool_id,
  }, {
    $set: {
      total_staked: Number(current_total_staked),
    },
  });
});

It is important to ensure that our handlers are idempotent. This is possible only if you design events so that they carry a snapshot of the current state: in our case, the pool's running total (current_total_staked). If the event carried only amount_ instead, we would have to replay every event just to find out how much is currently staked, which would greatly complicate the code.
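To make the difference concrete, here is a minimal in-memory sketch. The pools map and both helper functions are hypothetical stand-ins invented for this illustration; they are not part of ChainSyncer or Mongoose.

```javascript
// In-memory stand-in for the StakingPool collection (hypothetical, illustration only).
const pools = new Map();

// Snapshot-style handler: the event carries the resulting total, so we overwrite.
function applySnapshot(poolId, currentTotalStaked) {
  pools.set(poolId, { total_staked: Number(currentTotalStaked) });
}

// Delta-style handler: the event carries only the staked amount, so we accumulate.
function applyDelta(poolId, amount) {
  const pool = pools.get(poolId) || { total_staked: 0 };
  pools.set(poolId, { total_staked: pool.total_staked + Number(amount) });
}

// Delivering the same snapshot event twice leaves the state unchanged.
applySnapshot('1', '150');
applySnapshot('1', '150');
const snapshotTotal = pools.get('1').total_staked;

// Delivering the same delta event twice double-counts the stake.
applyDelta('2', '150');
applyDelta('2', '150');
const deltaTotal = pools.get('2').total_staked;

console.log({ snapshotTotal, deltaTotal }); // { snapshotTotal: 150, deltaTotal: 300 }
```

The snapshot handler can safely see the same event more than once; the delta handler cannot.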

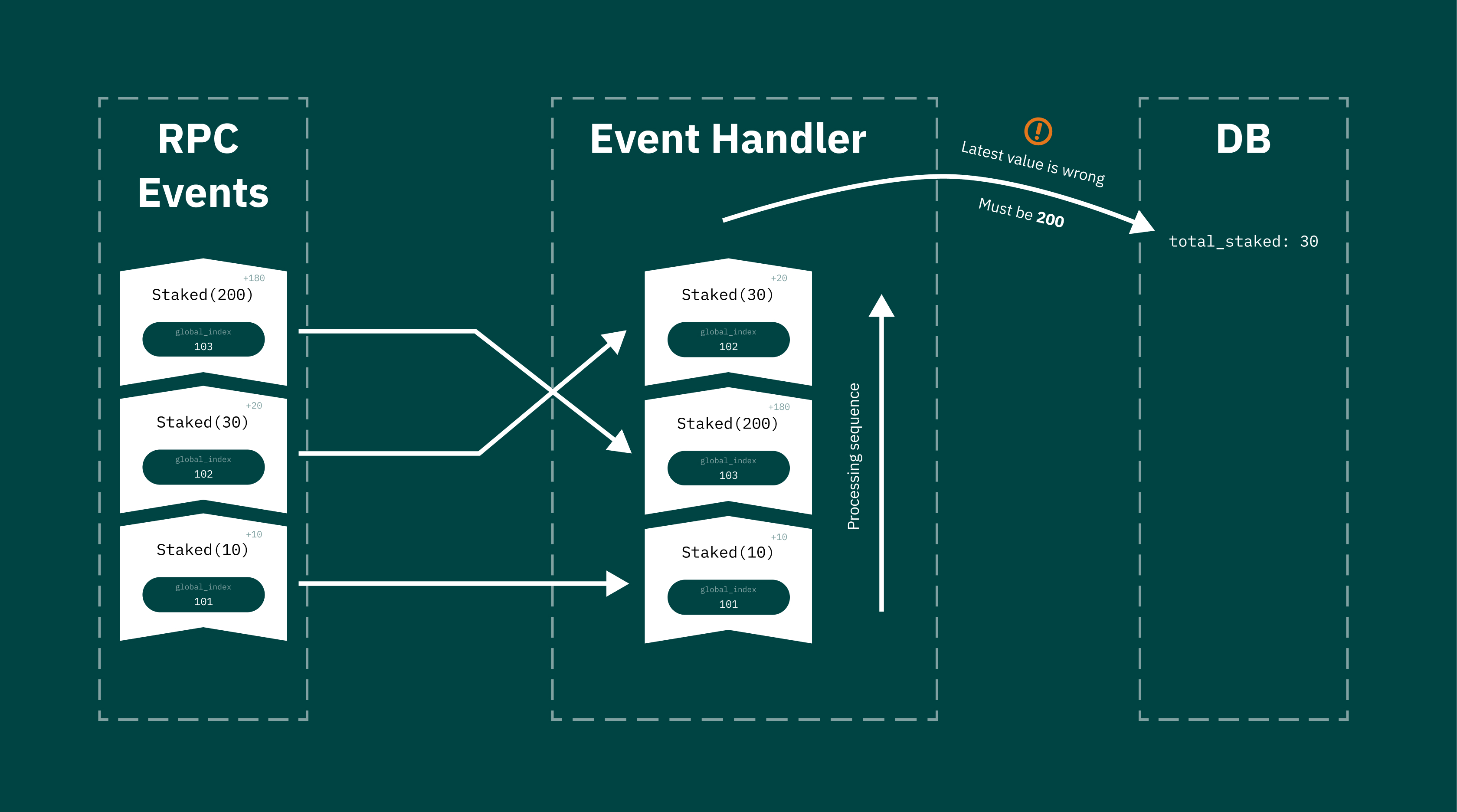

A rather serious bug hides in the code above. Since the module processes events in parallel, in batches (for maximum performance), an earlier event may well be processed after a later one. When that happens, the older snapshot overwrites the newer one, and total_staked on your backend ends up lower than it should be.

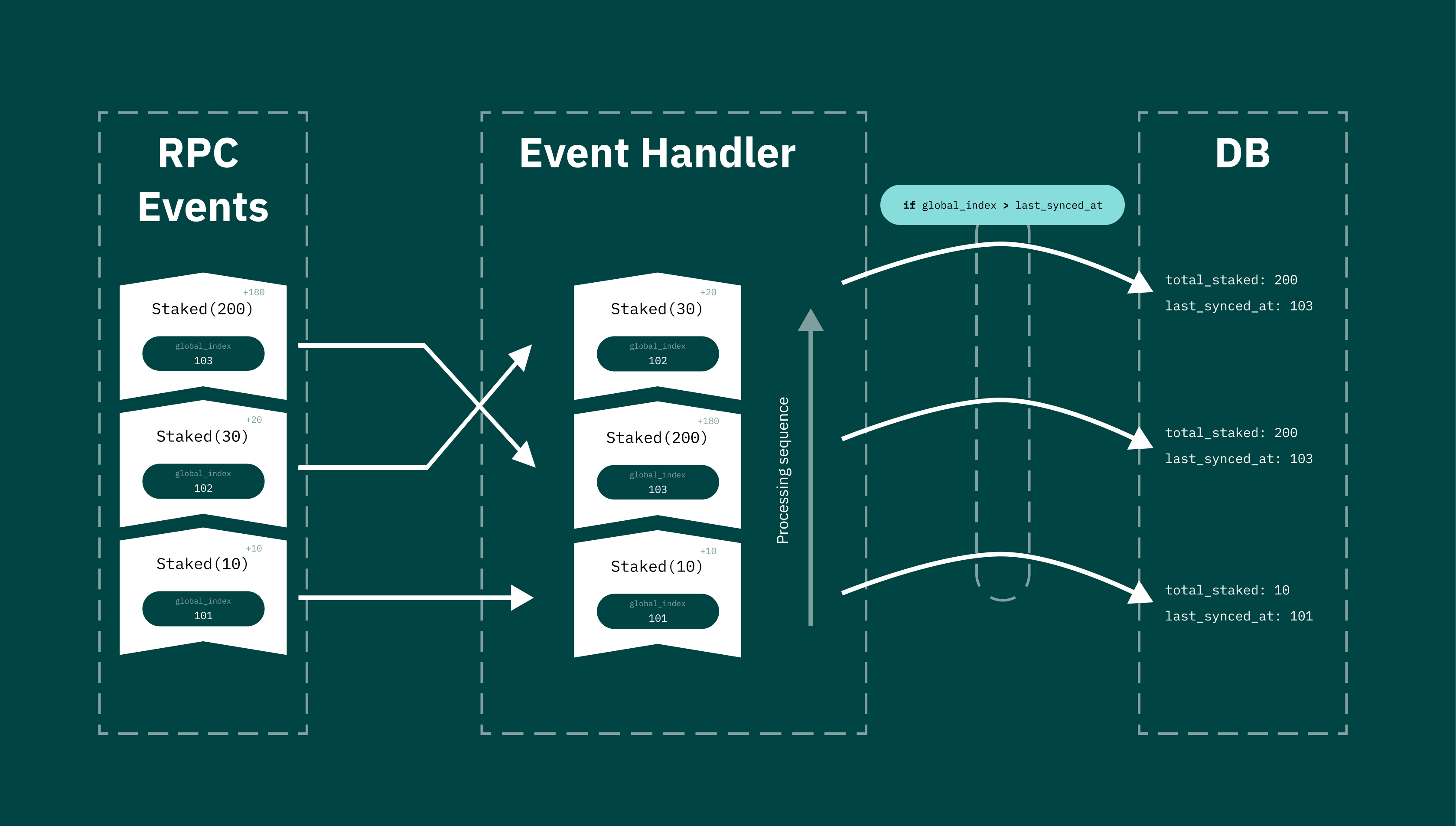

This is where the global_index parameter comes into play. With it, we can determine the most current total_staked state of the pool.

By making some changes to our handler, we can remove the risk of synchronization errors:

syncer.on('Staking.Staked', async (
  { global_index }, // we need only global_index
  pool_id,
  staked_by, // not needed here
  current_total_staked,
) => {
  await StakingPool.updateOne({
    _id: pool_id,
    // update total_staked only if global_index is greater than the last one saved
    last_synced_at: { $lt: global_index },
  }, {
    $set: {
      total_staked: Number(current_total_staked),
      // don't forget to save the index of the event we just applied
      last_synced_at: global_index,
    },
  });
});

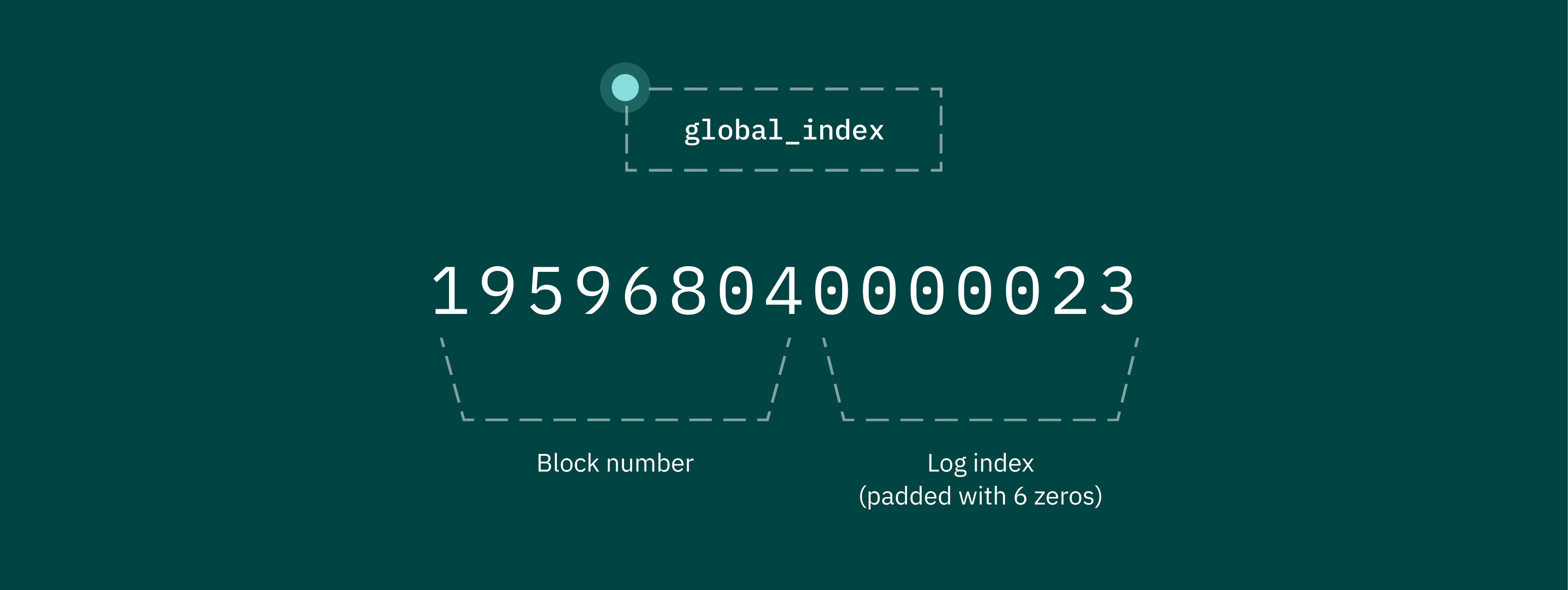
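To see the effect of the guard without a database, here is a minimal in-memory sketch. The makePool helper, the two apply functions, and the event values are made up for illustration and are not part of ChainSyncer or Mongoose. One assumption is worth calling out: a MongoDB $lt filter does not match documents where the field is missing, so the pool document presumably has to exist with an initial last_synced_at (here, -1) before the first event arrives.

```javascript
// Hypothetical pool record; last_synced_at starts below any real global_index.
function makePool() {
  return { total_staked: 0, last_synced_at: -1 };
}

// Mirrors the first handler: always overwrite.
function applyUnguarded(pool, event) {
  pool.total_staked = event.current_total_staked;
}

// Mirrors the second handler: overwrite only if the event is newer than the last one applied.
function applyGuarded(pool, event) {
  if (pool.last_synced_at < event.global_index) {
    pool.total_staked = event.current_total_staked;
    pool.last_synced_at = event.global_index;
  }
}

// Two Staked events for the same pool (illustrative values).
const older = { global_index: 1001, current_total_staked: 100 };
const newer = { global_index: 1002, current_total_staked: 250 };

// Parallel processing delivers the newer event first.
const arrivalOrder = [newer, older];

const unguardedPool = makePool();
const guardedPool = makePool();
for (const event of arrivalOrder) {
  applyUnguarded(unguardedPool, event);
  applyGuarded(guardedPool, event);
}

console.log(unguardedPool.total_staked); // 100 (stale)
console.log(guardedPool.total_staked);   // 250 (latest)
```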

You are probably wondering: Where does this parameter come from?

ChainSyncer builds it for each event by merging blockNumber and logIndex.

Thus, we get something like an incremental unique ID for each event.
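The exact formula the module uses is not reproduced here. The sketch below only shows one way a block number and a log index could be merged into a single sortable number; the makeGlobalIndex name and the multiplier are assumptions made for this example, so check the ChainSyncer source for the actual encoding.

```javascript
// Assumed multiplier: leaves room for up to 1,000,000 logs per block (illustrative choice).
const LOG_SLOTS_PER_BLOCK = 1000000;

// Hypothetical helper: combines blockNumber and logIndex into one increasing number.
function makeGlobalIndex(blockNumber, logIndex) {
  if (logIndex < 0 || logIndex >= LOG_SLOTS_PER_BLOCK) {
    throw new RangeError('logIndex does not fit into the reserved slots');
  }
  return blockNumber * LOG_SLOTS_PER_BLOCK + logIndex;
}

const a = makeGlobalIndex(17000000, 5);  // earlier log in a block
const b = makeGlobalIndex(17000000, 12); // later log in the same block
const c = makeGlobalIndex(17000001, 0);  // first log in the next block

console.log(a);              // 17000000000005
console.log(a < b && b < c); // true
```

Whatever the real encoding is, the property the handler above relies on is the same: a later event always gets a larger index than an earlier one.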

Postponement of Events

Another feature designed to help with the module's asynchrony is event postponement, which lets you preserve the order in which events are processed.

I’ll give you an example. You have a sword object for your crypto game that is stored entirely in a contract.

// SPDX-License-Identifier: MIT
pragma solidity 0.8.17;

contract Swords {
    struct Sword {
        uint256 damage;
        bool is_enchanted;
    }

    Sword[] public swords;

    event Created(
        uint256 sword_id,
        uint256 damage
    );

    event Enchanted(
        uint256 sword_id
    );

    function _create(uint256 damage_) internal returns (uint256) {
        uint256 sword_id = swords.length;
        swords.push(Sword(damage_, false));
        return sword_id;
    }

    function create(uint256 damage_) external {
        uint256 sword_id = _create(damage_);
        emit Created(sword_id, damage_);
    }

    function createAndEnchant(uint256 damage_) external {
        uint256 sword_id = _create(damage_);
        swords[sword_id].is_enchanted = true;
        emit Created(sword_id, damage_);
        emit Enchanted(sword_id);
    }
}

We also have a backend that keeps track of the status of each sword in the contract.

syncer.on('Swords.Created', async (
  { global_index, block_timestamp }, // not needed here
  sword_id,
  damage
) => {
  // the syncer delivers uint256 values as strings, but we need numbers
  sword_id = Number(sword_id);
  damage = Number(damage);

  // getting the sword by id
  const sword = await Sword.findOne({ _id: sword_id });

  // create a new one if no sword is found in our DB
  if (!sword) {
    await Sword.create({
      _id: sword_id,
      damage: damage,
      is_enchanted: false,
    });
  }
});

syncer.on('Swords.Enchanted', async (
  { global_index, block_timestamp }, // not needed here
  sword_id,
) => {
  sword_id = Number(sword_id);

  await Sword.updateOne({
    _id: sword_id,
  }, {
    $set: {
      is_enchanted: true, // marking the sword as enchanted
    }
  });
});

As I described above, events are processed in parallel, but in this example the handlers must run in a strict order. Since Created and Enchanted are emitted in the same transaction, there is an extremely high risk that the Enchanted event will be processed before the Created event. In that case the update matches no document, the sword is later created with is_enchanted: false, and consistency is lost.

To eliminate this bug, ChainSyncer has a built-in mechanism for postponing events: if a handler returns false, processing of that event is postponed until the next tick.

syncer.on('Swords.Enchanted', async (
  { global_index, block_timestamp }, // not needed here
  sword_id,
) => {
  sword_id = Number(sword_id);

  // getting the sword by id
  const sword = await Sword.findOne({ _id: sword_id });

  // if no sword is found in our DB,
  // postpone this event until the sword is created
  if (!sword) {
    return false;
  }

  await Sword.updateOne({
    _id: sword_id,
  }, {
    $set: {
      is_enchanted: true, // marking the sword as enchanted
    }
  });
});

Using this mechanism, we get something like an event dependency graph.
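The postponement idea can be modelled in a few lines. This is a simplified, synchronous sketch and not ChainSyncer's actual scheduler: the swords map, the handlers object, and the runTick function are all hypothetical names invented for this example.

```javascript
// Hypothetical in-memory stand-in for the Sword collection.
const swords = new Map();

// Simplified synchronous versions of the two handlers above.
const handlers = {
  Created: ({ sword_id, damage }) => {
    if (!swords.has(sword_id)) {
      swords.set(sword_id, { damage, is_enchanted: false });
    }
  },
  Enchanted: ({ sword_id }) => {
    const sword = swords.get(sword_id);
    if (!sword) {
      return false; // the sword is not known yet: postpone
    }
    sword.is_enchanted = true;
  },
};

// One tick: try every pending event and keep the ones whose handler returned false.
function runTick(pending) {
  const postponed = [];
  for (const event of pending) {
    const result = handlers[event.name](event.args);
    if (result === false) {
      postponed.push(event);
    }
  }
  return postponed;
}

// Worst case: Enchanted is picked up before Created.
let pending = [
  { name: 'Enchanted', args: { sword_id: 0 } },
  { name: 'Created', args: { sword_id: 0, damage: 10 } },
];

let ticks = 0;
while (pending.length > 0) {
  pending = runTick(pending);
  ticks += 1;
}

console.log(ticks);         // 2
console.log(swords.get(0)); // { damage: 10, is_enchanted: true }
```

On the first tick Enchanted is postponed and Created runs; on the second tick Enchanted finds the sword and succeeds, so the final state is correct regardless of arrival order.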

That’s all for now. By using these practices, you will get both high performance and reliable consistency, so you can sleep soundly.

In the next article I will tell you about more in-depth work using the module — scanning individual network blocks, scanner mode, and maybe something more.

There is still a war going on in Ukraine and at this moment the support of each and everyone is really important. Every dollar strongly brings Ukraine closer to victory 🇺🇦. How can you help.